EE4750 Tensor networks for green AI and signal processing

This advanced course is intended for 2nd year MSc students.

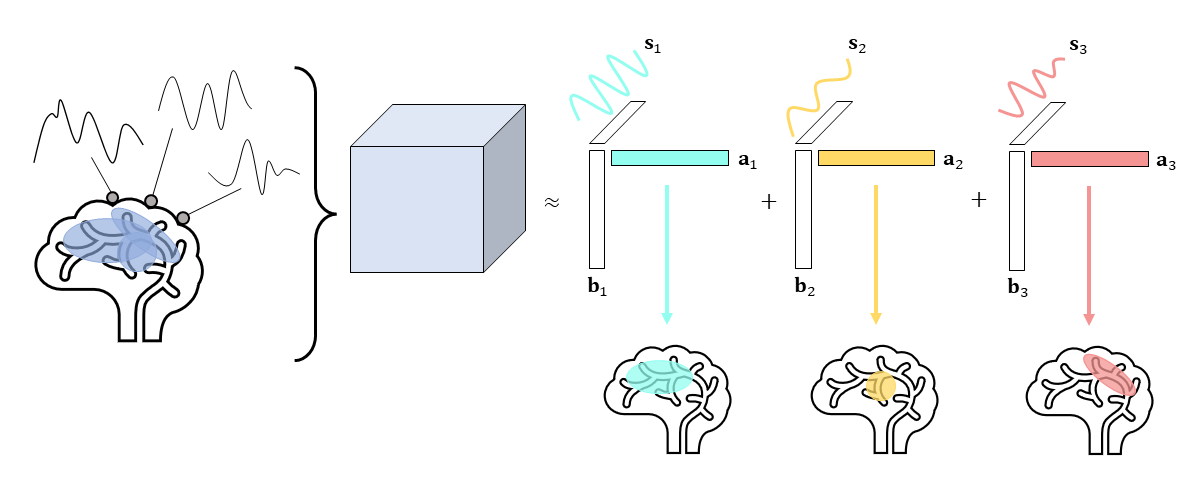

This course provides a solid mathematical foundation for tensor networks and discusses their use in machine learning and signal processing. Tensors are multidimensional generalizations of matrices. Real-life data are often high-dimensional, for example a video stream, or multichannel EEG recorded from multiple patients under various conditions. Tensor-based tools are crucial to represent and process such data efficiently. Alternative (matrix-based) solutions artificially segment such high-dimensional data into one- or two-dimensional arrays, which causes information loss by destroying the correlations in the data.

The key idea of tensor networks is to write a tensor in terms of much smaller tensors that represent the correlations among its different dimensions. On the one hand, such representations can reveal hidden structure in the data and can even lead to decompositions with physically interpretable terms. On the other hand, the original tensor never needs to be kept explicitly in memory. This latter aspect is especially relevant given the ever-increasing dataset sizes brought on by recent advances in sensor and imaging technology. Computations based on tensor networks enable faster training and inference of AI models on such large datasets. Tensor networks therefore support the shift towards Green AI, which aims to reduce the environmental footprint of AI computation and to make AI research more inclusive by reducing its dependence on the expensive hardware needed for training.

Course contents and description

The first, introductory lecture motivates the use of tensor networks with compelling examples from the teachers' own research experience. Next, the basic notation and tensor diagrams will be introduced, and important matrix and tensor operations will be explained, such as various products (inner, outer, Kronecker, Khatri-Rao, etc.), norms, and reshaping (matricization, vectorization). These operations and concepts are crucial for understanding how matrix decompositions generalize to tensor methods.
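
As an illustration of these operations, here is a minimal NumPy sketch (not taken from the course material). It builds a rank-1 tensor as an outer product and checks how vectorization and matricization relate it to Kronecker products. The helper names `khatri_rao`, `unfold` and `fold` are hypothetical, and the unfolding orders the columns in NumPy's row-major (C) order; other references use different ordering conventions, which permute the columns.

```python
import numpy as np


def khatri_rao(A: np.ndarray, B: np.ndarray) -> np.ndarray:
    """Column-wise Kronecker product: column r equals np.kron(A[:, r], B[:, r])."""
    if A.shape[1] != B.shape[1]:
        raise ValueError("A and B must have the same number of columns")
    # (I, 1, R) * (1, J, R) -> (I, J, R), then merge the first two axes
    return (A[:, None, :] * B[None, :, :]).reshape(-1, A.shape[1])


def unfold(T: np.ndarray, mode: int) -> np.ndarray:
    """Mode-n matricization: `mode` indexes the rows, other modes are flattened (C order)."""
    return np.moveaxis(T, mode, 0).reshape(T.shape[mode], -1)


def fold(M: np.ndarray, mode: int, shape) -> np.ndarray:
    """Inverse of unfold for a tensor of the given shape."""
    moved = [shape[mode]] + [s for i, s in enumerate(shape) if i != mode]
    return np.moveaxis(M.reshape(moved), 0, mode)


a = np.array([1.0, 2.0])
b = np.array([3.0, 4.0, 5.0])
c = np.array([1.0, -1.0])

X = np.einsum("i,j,k->ijk", a, b, c)  # outer product: rank-1 tensor of shape (2, 3, 2)
inner = np.sum(X * X)                  # inner product <X, X>
fro = np.linalg.norm(X)                # Frobenius norm, equal to sqrt(<X, X>)
vec_X = X.reshape(-1)                  # vectorization (C order)

# With C-order conventions, vectorizing and matricizing a rank-1 tensor gives Kronecker products
assert np.allclose(vec_X, np.kron(a, np.kron(b, c)))
assert np.allclose(unfold(X, 0), np.outer(a, np.kron(b, c)))
assert np.allclose(fold(unfold(X, 1), 1, X.shape), X)

A = np.arange(4.0).reshape(2, 2)
B = np.arange(6.0).reshape(3, 2)
KR = khatri_rao(A, B)  # shape (6, 2); np.kron(A, B) has shape (6, 4)
```

The Khatri-Rao product keeps only the "matching" columns of the Kronecker product (column r of `A` with column r of `B`), which is why it appears in the factor-matrix formulas of the CPD.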

This leads to the core of the course. The best-known tensor network is the singular value decomposition (SVD) of a matrix. The following two lectures present two different ways to generalize the SVD to tensors: one leads to the multilinear singular value decomposition (MLSVD), the other to the canonical polyadic decomposition (CPD). The properties, computation and applications of both generalizations will be discussed.
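
To make the first generalization concrete, the following is a minimal NumPy sketch of a (truncated) MLSVD, computed in the classical way: each factor matrix holds the leading left singular vectors of one unfolding, and the core is obtained by projecting the tensor onto these factors. The function names and the random test tensor are illustrative assumptions, not the course's reference implementation.

```python
import numpy as np


def unfold(T: np.ndarray, mode: int) -> np.ndarray:
    """Mode-n matricization (remaining modes flattened in C order)."""
    return np.moveaxis(T, mode, 0).reshape(T.shape[mode], -1)


def mode_n_product(T: np.ndarray, M: np.ndarray, mode: int) -> np.ndarray:
    """Multiply T along `mode` by M, where M has shape (new_dim, T.shape[mode])."""
    return np.moveaxis(np.tensordot(M, T, axes=(1, mode)), 0, mode)


def mlsvd(T: np.ndarray, ranks=None):
    """Truncated MLSVD: factor matrices from SVDs of the unfoldings, then the core."""
    ranks = T.shape if ranks is None else ranks
    factors = []
    for n, r in enumerate(ranks):
        # Leading left singular vectors of the mode-n unfolding
        U, _, _ = np.linalg.svd(unfold(T, n), full_matrices=False)
        factors.append(U[:, :r])
    # Core tensor S = T x_1 U1^T x_2 U2^T ... x_N UN^T
    core = T
    for n, U in enumerate(factors):
        core = mode_n_product(core, U.T, n)
    return core, factors


def mlsvd_to_full(core, factors):
    """Rebuild T_hat = S x_1 U1 x_2 U2 ... x_N UN."""
    T = core
    for n, U in enumerate(factors):
        T = mode_n_product(T, U, n)
    return T


rng = np.random.default_rng(0)
T = rng.standard_normal((4, 5, 6))

# Full multilinear ranks: the decomposition is exact
core, factors = mlsvd(T)
exact_error = np.linalg.norm(T - mlsvd_to_full(core, factors))

# Truncated to multilinear rank (2, 2, 2): a compressed approximation
core_r, factors_r = mlsvd(T, ranks=(2, 2, 2))
rel_error = np.linalg.norm(T - mlsvd_to_full(core_r, factors_r)) / np.linalg.norm(T)
```

Unlike the matrix case, truncating the MLSVD does not in general give the best approximation of a given multilinear rank, although it is quasi-optimal. The CPD has no comparable closed-form SVD-based algorithm in general and is usually computed iteratively, for instance with alternating least squares.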

The third tensor network structure that generalizes the matrix SVD is the tensor train (TT). Its properties, computation and applications will also be discussed. TTs are particularly well suited to representing large-scale vectors and matrices, because most common linear algebra operations can be carried out directly in TT format.
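
A tensor train can be computed with sequential truncated SVDs (the TT-SVD algorithm). The sketch below is a minimal NumPy version under simplifying assumptions: a single hypothetical cap `max_rank` on all TT ranks, and an illustrative test tensor T[i1, ..., i5] = i1 + ... + i5, whose TT ranks are exactly 2, so the truncation loses nothing.

```python
import numpy as np


def tt_svd(T: np.ndarray, max_rank: int):
    """TT-SVD: sequential truncated SVDs; returns cores of shape (r_{k-1}, n_k, r_k)."""
    shape = T.shape
    cores = []
    r_prev = 1
    C = T
    for k in range(len(shape) - 1):
        # Group (previous rank, current mode) as rows, all remaining modes as columns
        C = C.reshape(r_prev * shape[k], -1)
        U, s, Vt = np.linalg.svd(C, full_matrices=False)
        r = min(max_rank, len(s))
        cores.append(U[:, :r].reshape(r_prev, shape[k], r))
        # Carry the remainder S V^T on to the next mode
        C = s[:r, None] * Vt[:r, :]
        r_prev = r
    cores.append(C.reshape(r_prev, shape[-1], 1))
    return cores


def tt_to_full(cores):
    """Contract the train of cores back into a full tensor."""
    full = cores[0]
    for G in cores[1:]:
        # Contract the trailing rank index with the leading rank index of G
        full = np.tensordot(full, G, axes=(full.ndim - 1, 0))
    return full.reshape([G.shape[1] for G in cores])


# Illustrative tensor: T[i1, ..., i5] = i1 + ... + i5, which has TT ranks of 2
shape = (10,) * 5
T = np.indices(shape).sum(axis=0).astype(float)

cores = tt_svd(T, max_rank=2)
tt_params = sum(G.size for G in cores)  # numbers stored in the TT cores
error = np.linalg.norm(T - tt_to_full(cores)) / np.linalg.norm(T)
```

Here the TT stores 160 numbers instead of the 100000 entries of the full tensor. Practical implementations often choose the ranks from an error tolerance rather than a fixed cap.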

Finally, the last two lectures focus on the application of tensors to signal processing problems (e.g. blind source separation, data fusion) and machine learning problems (e.g. kernel methods). For this, we will introduce advanced concepts such as tensorization techniques and constrained and coupled decompositions.
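
One simple tensorization technique is reshaping (also called segmentation) a signal into a higher-order tensor. As a sketch with illustrative choices (the decay factor `z` and the length `2**d` are arbitrary), the snippet reshapes a sampled exponential into a 2 × 2 × ... × 2 tensor. Because z^n factorizes over the binary digits of n, the result is exactly a rank-1 tensor: the outer product of d vectors [1, z^(2^(d-1-k))].

```python
import numpy as np

d = 10    # tensor order (illustrative)
z = 0.97  # decay factor of the exponential (illustrative)

n = np.arange(2 ** d)
x = z ** n  # sampled exponential signal of length 2**d

# Tensorization by reshaping: in C order, n = b_0 * 2**(d-1) + ... + b_{d-1}
X = x.reshape((2,) * d)

# Predicted rank-1 factors: mode k carries the binary digit b_k of n
factors = [np.array([1.0, z ** (2 ** (d - 1 - k))]) for k in range(d)]
rank1 = factors[0]
for v in factors[1:]:
    rank1 = np.multiply.outer(rank1, v)

is_rank1 = np.allclose(X, rank1)
n_params = sum(v.size for v in factors)  # 2 * d numbers instead of 2**d samples
```

A sum of R such exponentials yields a tensor of CP rank at most R, which is one way a decomposition of the tensorized signal can separate its components.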

Various applications will be presented throughout the course. From biomedical signal processing, these include epileptic seizure localization in EEG, fusion of simultaneous EEG-fMRI, blind deconvolution of brain responses measured with functional ultrasound during visual information processing, and prostate cancer detection using ultrasound. The course also explains how tensor networks pave the way towards Green AI, by showing how machine learning methods such as kernel machines, artificial neural networks and Gaussian processes can be made more efficient and tractable for big data and higher-dimensional problems.

The course consists of eight 2-hour lectures of theory, including examples and applications, and four 2-hour computer lab sessions on practical implementation and applications. The lab sessions help students complete their computer assignments, which are an important part of the assessment. The examples in the assignments are taken from, e.g., biomedical applications (ECG and EEG data) and computer vision.

Learning objectives

After the course, the student is able to:

- Explain different tensor networks (TN)

- Implement basic tensor networks

- Formulate a basic data science or signal processing problem as a TN

- Make informed choices in the practical use of TNs, such as the rank or the initialization method

- Formulate research questions for their own (master's thesis level) research or for an independent literature study in the field and its applications, e.g. tensor-based (biomedical) signal processing, image analysis and control

Literature

The course covers Part 1 and selected chapters of Part 2 of the two-part monograph below. Further applications and examples will be drawn from recent scientific literature and the teachers' own research experience.

- Andrzej Cichocki, Namgil Lee, Ivan Oseledets, Anh-Huy Phan, Qibin Zhao and Danilo P. Mandic (2016), "Tensor Networks for Dimensionality Reduction and Large-scale Optimization: Part 1 Low-Rank Tensor Decompositions", Foundations and Trends® in Machine Learning: Vol. 9: No. 4-5, pp 249-429. http://dx.doi.org/10.1561/2200000059

- Andrzej Cichocki, Anh-Huy Phan, Qibin Zhao, Namgil Lee, Ivan Oseledets, Masashi Sugiyama and Danilo P. Mandic (2017), "Tensor Networks for Dimensionality Reduction and Large-scale Optimization: Part 2 Applications and Future Perspectives", Foundations and Trends® in Machine Learning: Vol. 9: No. 6, pp 431-673. http://dx.doi.org/10.1561/2200000067

Teachers

dr. Borbála Hunyadi

Biomedical signal processing, Tensor decompositions

Kim Batselier

Last modified: 2024-10-23

Details

| Credits: | 4 EC |
|---|---|
| Period: | 3/0/0/0 |
| Contact: | Borbála Hunyadi |